Machine learning has made our lives more productive, from hailing a ride via Uber’s ML-driven rider and driver matching to Google Now predicting information you’ll need before you need it. It has also made our lives safer, allowing people to rent strangers’ houses via Airbnb and reducing the risk of fraud during online purchases. Recent advances in deep learning have brought more new technologies within reach, including self-driving cars, machine translation, predicting weather several years ahead, automated stock trading, and more. In this track, hear from practitioners about interesting applications of machine learning and recent practical advances in deep learning.

Track: Machine Learning 2.0

Location: Majestic Complex, 6th fl.

Day of week:

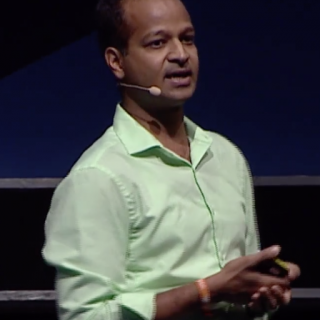

Track Host: Soups Ranjan

Soups Ranjan is the Director of Data Science at Coinbase, one of the largest bitcoin exchanges in the world. He manages the Risk & Data Science team, which is chartered with preventing avoidable losses to the company due to payment fraud or account takeovers. Soups has a PhD in ECE on network security from Rice University. He previously led the development of machine learning pipelines to improve performance advertising at Yelp and Flurry. He is the founder of RiskSalon.org, a round-table forum for risk professionals in San Francisco to share ideas on stopping bad actors.

Semi-Supervised Deep Learning for Climate @ Scale

Climate change is one of the most important problems facing humanity in the 21st century. Climate simulations provide us with a unique opportunity to understand the evolution of the climate system under various CO2 emission scenarios. Large-scale climate simulations produce 100TB-sized spatio-temporal, multi-variate datasets, making it difficult to conduct sophisticated analytics. In this talk, I will present our results in applying Deep Learning for supervised and semi-supervised learning of extreme weather patterns. I will briefly highlight our efforts in scaling Deep Learning to 9000 KNL nodes on a supercomputer. The audience will gain a better appreciation for the breadth of problems to which Deep Learning has been successfully applied, and insights into open challenges for this emerging field.

Solving Payment Fraud and User Security with ML

Coinbase is one of the largest digital currency exchanges in the world. We store about $1B of digital currency (bitcoin, litecoin, ether) on behalf of our users. Because digital currency transfers are instant and irreversible, we face one of the hardest payment fraud and security problems in the world. We are constantly targeted by the most sophisticated scammers, which puts us at the forefront of the fight against fraudsters and hackers. We've witnessed and closed loopholes exploited by fraudsters years ahead of the broader industry (e.g., vulnerabilities in second-factor tokens delivered by SMS, phone porting attacks, and loopholes in online identity verification). I'll talk about our risk program, which relies on machine learning (supervised and unsupervised), rules-based systems, and highly skilled human fraud fighters. I'll present attack trends and techniques we've seen over the past years and how the entire system has worked in concert, allowing us to stay a step ahead of the bad actors.

Evaluating Machine Learning Models: A Case Study

American homes represent a $25 trillion asset class, with very little liquidity. Selling a home on the market takes months of hassle and uncertainty. Opendoor offers to buy houses from sellers, charging a fee for this service. Opendoor bears the risk in reselling the house and needs to understand the effectiveness of different hazard-based liquidity models.

This talk focuses on how to estimate the business impact of launching various machine learning models, in particular, those we use for modeling the liquidity of houses. For instance, if AUC increases by a certain amount, what is the likely impact on various business metrics such as volume and margin?

With the rise of machine learning, there has been a spate of work on integrating such techniques into other fields. One such application area is econometrics and causal inference (cf. Varian, Athey, Pearl, Imbens), where the goal is to leverage advances in machine learning to better estimate causal effects. Because a typical A/B test of a real estate liquidity model can run for many months before resale outcomes are fully realized, we use a simulation-based framework to estimate the causal impact on the business (e.g., on volume and margin) of launching a new model.

Online Learning & Custom Decision Services

We have been on a decade-long quest to make online interactive learning a routine fact of life for programmers everywhere. Online learning systems can react quickly to changes in behaviour and have wide-ranging applications such as fraud detection and advertising click-through rate prediction. Doing this well has required fundamental research and the development of one of the most popular open-source online learning systems (http://hunch.net/~vw). Most recently, we have also created a system (http://aka.ms/mwt, http://ds.microsoft.com) that automates exploit-explore strategies, data gathering, and learning to make online interactive learning stable and routinely usable. For well-framed problems such as content personalization, sophisticated interactive online machine learning is now readily available to programmers. For data scientists who can program, this is a fantastic new tool for solving previously unsolvable problems. As an example: How do you interpret an EEG signal so a user can type?

Markus Cozowicz, Senior Research Software Development Engineer @Microsoft
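
As a rough illustration of the exploit-explore idea mentioned in the abstract above, here is a minimal epsilon-greedy sketch in plain Python. It is not the API of the systems linked in the abstract, and it ignores context features entirely; the class name `EpsilonGreedy`, the `epsilon` value, and the simulated click rates are all assumptions made up for this example.

```python
import random


class EpsilonGreedy:
    """Context-free epsilon-greedy bandit (hypothetical helper, not a library API)."""

    def __init__(self, n_actions: int, epsilon: float = 0.1, seed: int = 0) -> None:
        self.epsilon = epsilon
        self.counts = [0] * n_actions    # times each action was tried
        self.values = [0.0] * n_actions  # running mean reward per action
        self.rng = random.Random(seed)

    def choose(self) -> int:
        # Explore: with probability epsilon, pick a uniformly random action.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.counts))
        # Exploit: otherwise pick the action with the best estimate so far.
        return max(range(len(self.values)), key=self.values.__getitem__)

    def learn(self, action: int, reward: float) -> None:
        # Incremental update of the running mean for the chosen action.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


# Simulated feedback loop; the click rates below are made up for illustration.
true_click_rates = [0.05, 0.20]
bandit = EpsilonGreedy(n_actions=len(true_click_rates), epsilon=0.1)
env = random.Random(1)
for _ in range(5000):
    action = bandit.choose()
    reward = 1.0 if env.random() < true_click_rates[action] else 0.0
    bandit.learn(action, reward)
```

The learner updates after every interaction rather than in batches, which is what lets an online system react quickly to changes in behaviour. A production system would also log the probability with which each action was chosen so that new policies can be evaluated offline.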

Automating Inventory at Stitch Fix

Stitch Fix is a personalized styling service for clothing and accessories. Items are selected by a stylist from initial personalized recommendations. The client keeps the items they love and returns the rest, while providing detailed feedback. We use machine learning and analytics to power every aspect of our business, from personalized recommendations to inventory management and demand modeling.

In this talk, I’ll focus on the use of machine learning within our inventory forecasting system, which relies on information and feedback captured throughout the user lifecycle to predict the flow of apparel and accessories between vendors, warehouses, and clients. This problem domain is challenging because success is measured, and must be optimized, against multiple metrics. In highlighting the modular components of our forecasting framework, I will illustrate the architecture we have developed in-house.

While describing our use of Bayesian methods to predict which items a stylist will pick to send a client from a ranked list of personalized recommendations, I will give guidance on how this technique can be applied more generally to similar examples of beta-binomial regression. Because this component is critical to our inventory forecast of quantity over time, we update the priors daily based on new evidence of stylists’ behavior. This detailed explanation of how machine learning is applied to determine the parameters of our physical-process inventory models illustrates the blend of techniques the inventory optimization team at Stitch Fix uses to accurately capture the variance in stock quantity over time.
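
To make the idea of updating priors daily concrete, the following is a minimal sketch of a conjugate Beta update for a binomial pick rate. It is a simplified stand-in, not the Stitch Fix model: the `BetaPrior` class, the uniform Beta(1, 1) starting prior, and the daily counts are all hypothetical, and a full beta-binomial regression would additionally tie the parameters to item and client features.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaPrior:
    """Beta(alpha, beta) belief about the probability that an item is picked."""

    alpha: float
    beta: float

    def update(self, picked: int, shown: int) -> "BetaPrior":
        # Conjugate update: successes add to alpha, failures add to beta.
        if not 0 <= picked <= shown:
            raise ValueError("picked must be between 0 and shown")
        return BetaPrior(self.alpha + picked, self.beta + (shown - picked))

    @property
    def mean(self) -> float:
        # Expected pick probability under the current belief.
        return self.alpha / (self.alpha + self.beta)


# Hypothetical data: (times picked, times shown) for one item on two days.
daily_counts = [(3, 10), (5, 10)]

posterior = BetaPrior(alpha=1.0, beta=1.0)  # assumed uniform starting prior
for picked, shown in daily_counts:
    # Yesterday's posterior becomes today's prior.
    posterior = posterior.update(picked, shown)
```

After both days the belief is Beta(9, 13), so the estimated pick probability is 9/22, or roughly 0.41. Because each day's posterior serves as the next day's prior, the estimate tracks new evidence without reprocessing the full history.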