Presentation: Engineering Systems for Real-Time Predictions @DoorDash

This presentation is now available to view on InfoQ.com

What You’ll Learn

- Understand the most common problems that come with using machine learning in practice.

- Gain a better understanding of moving from algorithms to real-world products.

- Learn some of the tools and techniques DoorDash uses to overcome some of these problems and ship their prediction service.

Abstract

Today, applying machine learning to drive business value in a company requires a lot more than figuring out the right algorithm to use; it requires tools and systems to manage the entire machine learning product lifecycle. For instance, we need systems to manage data pipelines, to monitor model performance and detect degradations, to analyze data quality and ensure consistency between training and prediction environments, to experiment with different versions of models, and to periodically retrain models and automatically deploy them.

At DoorDash, an on-demand logistics company, we fulfill deliveries on a dynamic marketplace, which requires extensive use of real-time predictions. Through many iterations of applying machine learning in our products, we identified solutions to address the above problems and built these into our machine learning platform. This has dramatically reduced the cost of integrating machine learning into our products, saved us weeks of development time, and allowed us to use ML in new product areas.

In this talk, we will present our thoughts on how to structure machine learning systems in production to enable robust and wide-scale deployment of machine learning and share best practices in designing engineering tooling around machine learning.

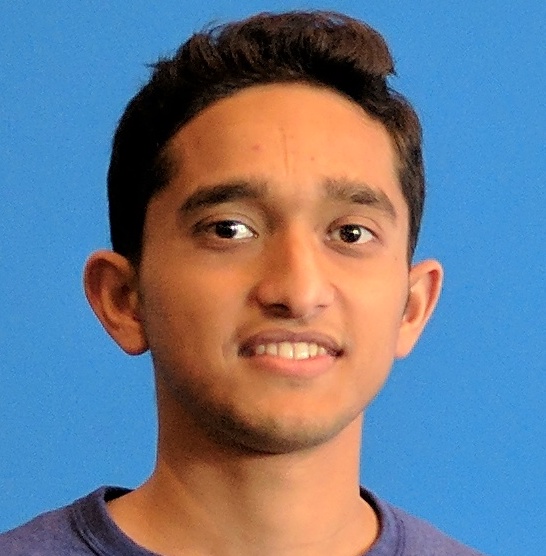

QCon: Can you describe the machine learning platform you leverage at DoorDash?

Raghav: We built our system around common open source machine learning libraries in Python, such as scikit-learn, LightGBM, and Keras. We have a microservices architecture, also built in Python, which includes a prediction service that handles all the predictions and a features service. All the services are hosted on AWS.

QCon: Can you briefly describe your real-time prediction system?

Raghav: Our prediction system responds to HTTP/RPC requests. It accesses a model store to fetch the right model to use and obtains features from a features service.

Two types of features are used for predictions:

- Real-time features about a delivery, such as how many items the delivery contains or the current time and day of the week. These features are calculated for the delivery and passed into the system.

- Batch aggregate features, which are pre-calculated and exposed through the features service.

So, for example, to predict ETAs, we make an HTTP/RPC request to the prediction service for every delivery. The service knows how to fetch the model, use these features, and make the prediction.
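The request flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ModelStore`, `FeatureService`, `PredictionService`, the feature names, and the toy ETA formula are all hypothetical and are not taken from DoorDash's system. The sketch shows the three steps from the answer: fetch the model, merge batch aggregate features with the real-time features sent in the request, and predict.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Features = Dict[str, float]
Model = Callable[[Features], float]  # hypothetical: a model is any callable


class ModelStore:
    """Hypothetical in-memory model store keyed by model name."""

    def __init__(self) -> None:
        self._models: Dict[str, Model] = {}

    def register(self, name: str, model: Model) -> None:
        self._models[name] = model

    def fetch(self, name: str) -> Model:
        return self._models[name]


class FeatureService:
    """Hypothetical lookup of pre-calculated batch aggregate features."""

    def __init__(self, table: Dict[str, Features]) -> None:
        self._table = table

    def get(self, entity_id: str) -> Features:
        # Return a copy so callers cannot mutate the stored aggregates.
        return dict(self._table.get(entity_id, {}))


@dataclass
class PredictionService:
    models: ModelStore
    features: FeatureService

    def predict(self, model_name: str, entity_id: str, realtime: Features) -> float:
        model = self.models.fetch(model_name)   # 1. fetch the right model
        merged = self.features.get(entity_id)   # 2. batch aggregate features
        merged.update(realtime)                 # 3. add real-time features
        return model(merged)                    # 4. make the prediction


# Toy usage: an "ETA model" that is just a hand-written formula.
store = ModelStore()
store.register("eta", lambda f: f["avg_prep_minutes"] + 2.0 * f["num_items"])
service = PredictionService(store, FeatureService({"store-1": {"avg_prep_minutes": 12.0}}))
eta = service.predict("eta", "store-1", {"num_items": 3.0})
```

In a real deployment each of these pieces would sit behind a network call; the in-process version only makes the data flow visible.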

QCon: In your abstract, you talk about going through iterations of models. How do you go about testing and comparing your models at DoorDash?

Raghav: We use two layers of testing.

Before launching a model, we use a shadow setup, in which the model is not used to change the product. Instead, we measure its predictions against those of the current model that is running. This helps us determine the accuracy of the model being tested in production. This is the first layer of testing.
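A shadow setup of this kind might look roughly like the sketch below. It is an assumption-laden illustration, not DoorDash's implementation: `serve_with_shadow`, `mean_abs_gap`, and the in-memory log are hypothetical names. The key property is that only the live model's output is returned to the product, while the candidate's output is recorded for later comparison.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]
Model = Callable[[Features], float]


def serve_with_shadow(
    live: Model,
    shadow: Model,
    features: Features,
    log: List[Tuple[float, float]],
) -> float:
    """Return the live prediction; record the shadow prediction on the side."""
    live_pred = live(features)
    try:
        log.append((live_pred, shadow(features)))
    except Exception:
        # A failing shadow model must never affect the product response.
        pass
    return live_pred


def mean_abs_gap(log: List[Tuple[float, float]]) -> float:
    """Average absolute difference between live and shadow predictions."""
    if not log:
        raise ValueError("no shadow predictions recorded")
    return sum(abs(live - shadow) for live, shadow in log) / len(log)
```

Once actual outcomes are known, the same log can be joined against them to compare the error of each model rather than only the gap between the two.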

The second layer of testing is an A/B test that chooses among the multiple models available. Here we start using the model in the actual product. We measure its performance and also look at the overall product metrics, for example an engagement metric or other user metrics.
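The interview does not say how deliveries or users are assigned to models in the A/B test. One common approach, shown here purely as an assumption, is deterministic hash-based bucketing, so that the same unit always sees the same model. The function name `assign_variant` and the identifiers in the usage lines are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(unit_id: str, experiment: str, variants: Sequence[str]) -> str:
    """Deterministically map a unit (user, delivery, ...) to one variant."""
    if not variants:
        raise ValueError("at least one variant is required")
    # Salting with the experiment name keeps assignments independent
    # across experiments that share the same unit ids.
    digest = hashlib.sha256(f"{experiment}:{unit_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# Usage: the same id always lands in the same arm.
variants = ["model_a", "model_b"]
arm = assign_variant("delivery-1", "eta-test", variants)
```

Because the assignment depends only on the ids, it needs no stored state and can be recomputed when joining predictions with product metrics.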

QCon: What do you want the audience to take away from your talk?

Raghav: The biggest takeaway would be to understand the common problems encountered when implementing machine learning in real-world products. I also plan to discuss a few ideas on designing systems to overcome these problems and thereby ship more machine learning models in practice.

An example of a common problem is a discrepancy between training and production environments. Models are often trained offline, and when you use them in production, the feature distributions in the two environments can differ, which affects the accuracy of the predictions. I will go through how the systems we built help us solve these issues.
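The interview does not describe how such a discrepancy is detected. As one possible illustration, the sketch below computes the Population Stability Index (PSI), a widely used drift score, between the training values and the production values of a single feature. The function name `psi`, the bin count, and the smoothing constant are assumptions chosen for the example.

```python
import math
from typing import List, Sequence


def psi(train: Sequence[float], prod: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `prod` relative to `train` for one feature."""
    if not train or not prod:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(train), max(train)
    if hi == lo:
        raise ValueError("training values are constant; cannot build bins")
    width = (hi - lo) / bins

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the training range; out-of-range
            # production values are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each fraction at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    p, q = fractions(train), fractions(prod)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A score of zero means the binned distributions match; larger values indicate a bigger shift, and the alerting threshold is something each team would tune for its own features.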
